Deferred Rendering: Making Games More Life-Like

- Kamaldeep Singh

- Mar 26, 2020

Updated: Dec 26, 2022

For a long time, graphics engineers lit their scenes using forward rendering, also called forward shading. Forward rendering is a direct approach: we render an object, light it according to every light source in the scene, and then move on to the next object, repeating this for each object in the scene. It is easy to understand and implement, but it takes a toll on performance. Forward rendering is computationally intensive because every fragment of every rendered object has to iterate over every light source. Another downside is that it wastes fragment shader (FS) runs in scenes with high depth complexity (where multiple objects cover the same screen pixel), because most of those fragment shader outputs end up being overwritten.
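
To make this cost concrete, here is a minimal CPU-side sketch of the forward approach in Python. It assumes a toy scene in which each object is simply a list of fragments, uses a hypothetical `shade` function as a stand-in for the lighting loop, and assumes no early depth rejection, so every fragment is shaded before the depth test decides whether it survives.

```python
from dataclasses import dataclass


@dataclass
class Fragment:
    pixel: tuple  # (x, y) screen position
    depth: float  # smaller means closer to the camera
    albedo: float  # scalar stand-in for the surface colour


def shade(fragment, lights):
    # Hypothetical lighting: one term per light, so cost grows with len(lights).
    return fragment.albedo * sum(lights)


def forward_render(objects, lights):
    """Render object by object, lighting every fragment with every light."""
    color, depth = {}, {}
    shader_runs = 0
    light_evaluations = 0
    for obj in objects:  # one object at a time
        for frag in obj:  # every fragment the object covers
            shader_runs += 1
            light_evaluations += len(lights)
            value = shade(frag, lights)  # shaded before the depth test
            if frag.depth < depth.get(frag.pixel, float("inf")):
                depth[frag.pixel] = frag.depth
                color[frag.pixel] = value
    # Only one shaded value per pixel survives; the rest were wasted work.
    wasted = shader_runs - len(color)
    return color, shader_runs, light_evaluations, wasted
```

With three overlapping layers covering the same 4x4 pixels and 100 lights, the sketch runs the shader 48 times and performs 4,800 light evaluations, yet only 16 results reach the screen.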

Another problem with forward rendering arises when there are many light sources. In that case the individual lights tend to be small, with a limited area of effect (otherwise they would overpower the scene). Yet the fragment shader still calculates the effect of every light source, even one that is far away from the pixel being shaded. Checking the distance from the pixel to each light so that distant lights can be skipped adds further overhead and branching to the FS. As a result, forward rendering does not scale well with the number of lights: with hundreds of light sources, the amount of computation the FS must perform grows manyfold.
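
The sketch below illustrates that per-pixel loop. It assumes lights are given as hypothetical (position, radius) pairs with a made-up quadratic falloff that reaches zero at the radius; the point is that the distance test is paid for every light, even though the branch then skips almost all of them.

```python
import math


def attenuation(distance, radius):
    """Hypothetical falloff that reaches exactly zero at the light's radius."""
    if distance >= radius:
        return 0.0
    falloff = 1.0 - distance / radius
    return falloff * falloff


def shade_pixel(pixel_pos, lights):
    """Forward-style loop over (position, radius) lights for a single pixel.

    Returns the accumulated light, the number of distance tests performed and
    the number of lights that actually contributed.
    """
    total = 0.0
    distance_tests = 0
    contributing = 0
    for light_pos, radius in lights:
        distance_tests += 1  # paid for every light in the scene...
        d = math.dist(pixel_pos, light_pos)
        if d >= radius:  # ...even when this branch skips the light
            continue
        contributing += 1
        total += attenuation(d, radius)
    return total, distance_tests, contributing
```

For example, with 100 lights of radius 1 laid out on a 10x10 grid spaced 10 units apart, a pixel at the origin needs 100 distance tests even though only one light reaches it.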

This is where deferred shading, or deferred rendering, steps in to overcome these issues. Deferred rendering considerably changes the way we render objects. It opens up several new options that significantly optimize scenes with large numbers of lights, allowing hundreds or even thousands of lights to be rendered at an acceptable frame rate. The primary goal behind deferred rendering was to minimise the use of system resources through the way its pipeline is organised. Whilst its counterpart, forward rendering, follows a more linear approach (which has its own advantages), deferred rendering copes with scene complexity by first rendering the scene's basic attributes, such as depth, normals and diffuse colour, and only then computing the lighting. These attributes are stored in the G-buffer, which consists of full-screen render targets, each holding part of the information needed to produce the final image.
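
A back-of-the-envelope model shows where the savings come from. The sketch below is a simplification under stated assumptions: every pixel is covered `depth_complexity` times, each light is evaluated for every fragment it is given (no light-volume culling), and the memory bandwidth needed to write and read the G-buffer is ignored.

```python
def forward_cost(pixels, depth_complexity, lights):
    # Every rasterized fragment, including overdrawn ones, loops over all lights.
    fragments = pixels * depth_complexity
    return {"fragments": fragments, "light_evaluations": fragments * lights}


def deferred_cost(pixels, depth_complexity, lights):
    # The geometry pass still rasterizes every fragment but does no lighting;
    # the lighting pass then evaluates each light once per screen pixel.
    fragments = pixels * depth_complexity
    return {"fragments": fragments, "light_evaluations": pixels * lights}


if __name__ == "__main__":
    pixels, depth_complexity, lights = 1920 * 1080, 3, 200
    print(forward_cost(pixels, depth_complexity, lights)["light_evaluations"])
    print(deferred_cost(pixels, depth_complexity, lights)["light_evaluations"])
```

For a 1920x1080 frame with a depth complexity of 3 and 200 lights, this gives 1,244,160,000 light evaluations for forward rendering against 414,720,000 for deferred rendering: in this model, lighting cost no longer grows with overdraw.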

Below is an image comparison between deferred and forward rendering. The same scene/view was rendered with both techniques and then split into two halves: the left half shows deferred rendering and the right half shows forward rendering. The difference is easy to see. The left view has better detail and shadow information, and it also looks more realistic due to the textures.

What is Deferred Rendering?

To better understand deferred rendering, we need to understand a couple of rendering concepts and terminologies.

1. Fragment shader - The fragment shader processes a fragment (the data needed to generate a single pixel's worth of a drawing), generated by rasterization, into a set of colors and a single depth value.

The fragment shader is the OpenGL pipeline stage that runs after a primitive (a basic element of graphics output, such as a point, a line or a triangle) has been rasterized. For each sample of the pixels covered by a primitive, a "fragment" is generated. Each fragment has a window-space position and a few other values, and it contains all of the interpolated per-vertex output values from the last vertex processing stage.

The output of a fragment shader is a depth value, a possible stencil value (unmodified by the fragment shader), and zero or more color values to be potentially written to the buffers of the current framebuffer.
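
The sketch below mimics on the CPU how a rasterizer turns one triangle into fragments and hands each one to a fragment shader. It is a simplification: attributes are interpolated linearly in screen space (real GPUs use perspective-correct interpolation), one fragment is generated per pixel centre rather than per sample, and `fragment_shader` is a hypothetical pass-through that returns one colour plus the depth.

```python
from dataclasses import dataclass


@dataclass
class Vertex:
    x: float  # window-space position in pixels
    y: float
    z: float  # window-space depth in [0, 1]
    color: tuple  # per-vertex output of the vertex stage, as (r, g, b)


def edge(ax, ay, bx, by, px, py):
    # Twice the signed area of the triangle (a, b, p).
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize(v0, v1, v2, width, height):
    """Yield (pixel, depth, interpolated colour) for each covered pixel centre."""
    area = edge(v0.x, v0.y, v1.x, v1.y, v2.x, v2.y)
    if area == 0:
        return  # degenerate triangle covers nothing
    for py in range(height):
        for px in range(width):
            cx, cy = px + 0.5, py + 0.5
            # Barycentric weights; dividing by the signed area handles either winding.
            w0 = edge(v1.x, v1.y, v2.x, v2.y, cx, cy) / area
            w1 = edge(v2.x, v2.y, v0.x, v0.y, cx, cy) / area
            w2 = edge(v0.x, v0.y, v1.x, v1.y, cx, cy) / area
            if w0 < 0 or w1 < 0 or w2 < 0:
                continue  # pixel centre lies outside the triangle
            depth = w0 * v0.z + w1 * v1.z + w2 * v2.z
            color = tuple(w0 * a + w1 * b + w2 * c
                          for a, b, c in zip(v0.color, v1.color, v2.color))
            yield (px, py), depth, color


def fragment_shader(color, depth):
    # Hypothetical pass-through: one RGBA colour output, depth left unchanged.
    r, g, b = color
    return (r, g, b, 1.0), depth
```

A right triangle with corners at (0, 0), (4, 0) and (0, 4) covers 10 pixel centres of a 4x4 grid, and each fragment's colour is a weighted blend of the three vertex colours.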

2. Geometry shader - A geometry shader (GS) is a shader program that governs the processing of primitives. A GS receives a single base primitive and it can output a sequence of vertices that generates 0 or more primitives. This is unlike vertex shaders, which are limited to a 1:1 input to output ratio. A GS can also perform layered rendering, where different primitives can be rendered to different attached layered images.

Geometry shaders reside between the vertex shaders (or the optional Tessellation stage) and the fixed-function vertex post-processing stage. The GS is optional; if it is not present, vertices passed from prior stages are given to the next stage directly.

The main reasons to use a GS are:

· Layered rendering: Layer rendering takes one primitive and renders it to multiple images without having to change bound render targets.

· Transform feedback: The primitives emitted by the GS can be captured into buffer objects, which is employed for doing computational tasks on the GPU.

With OpenGL 4.0, geometry shaders gained two new features: the ability to write to multiple output streams, and GS instancing, which allows multiple invocations to operate on the same input primitive.
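
To illustrate the 1:N behaviour, here is a hypothetical Python model of the geometry stage. `layered_gs` copies one triangle to six layers, as a cube-map pass might (a real GLSL geometry shader would write `gl_Layer` and would typically apply a different view matrix per face, which this sketch omits), while `culling_gs` emits nothing at all for degenerate triangles.

```python
def layered_gs(triangle, num_layers=6):
    """One input primitive -> num_layers output primitives, one per layer."""
    return [{"layer": layer, "vertices": triangle} for layer in range(num_layers)]


def culling_gs(triangle):
    """One input primitive -> zero or one output primitives."""
    (ax, ay), (bx, by), (cx, cy) = triangle
    twice_area = (bx - ax) * (cy - ay) - (by - ay) * (cx - ax)
    if twice_area == 0:
        return []  # degenerate triangle: emit nothing
    return [{"layer": 0, "vertices": triangle}]


def geometry_stage(primitives, gs):
    """Run the geometry shader once per input primitive and gather its output."""
    out = []
    for prim in primitives:
        out.extend(gs(prim))
    return out
```

Feeding one regular and one degenerate triangle through the stage yields 12 primitives with `layered_gs` but only 1 with `culling_gs`, something a vertex shader's strict 1:1 mapping cannot express.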

3. Vertex shader - The vertex shader is a programmable shader stage in the rendering pipeline that handles the processing of individual vertices. Vertex shaders are fed with vertex attribute data, as specified from a vertex array object by a drawing command. A vertex shader receives a single vertex from the vertex stream and generates a single vertex to the output vertex stream. There must be a 1:1 mapping from input vertices to output vertices.

Vertex shaders typically perform transformations to post-projection space, for consumption by the vertex post-processing stage. They can also be used to do per-vertex lighting, or to perform setup work for later shader stages.
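
As a rough model of that transformation, the Python sketch below builds an OpenGL-style perspective matrix (the same form `gluPerspective` produces), combines it with a model translation, and runs a hypothetical `vertex_shader` once per vertex, producing exactly one clip-space position per input position.

```python
import math


def mat_mul(a, b):
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)] for r in range(4)]


def mat_vec(m, v):
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def perspective(fovy_deg, aspect, near, far):
    # OpenGL convention: right-handed view space, camera looking down -z.
    f = 1.0 / math.tan(math.radians(fovy_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def translation(tx, ty, tz):
    return [
        [1.0, 0.0, 0.0, tx],
        [0.0, 1.0, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def vertex_shader(position, mvp):
    """One vertex in, one clip-space vertex out."""
    x, y, z = position
    return mat_vec(mvp, [x, y, z, 1.0])


def vertex_stage(positions, mvp):
    # The 1:1 mapping: the output list always has the same length as the input.
    return [vertex_shader(p, mvp) for p in positions]
```

Dividing the clip-space x, y and z by w gives normalized device coordinates, which the vertex post-processing stage then maps to the window; a vertex lying exactly on the near plane ends up with an NDC depth of -1.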

4. G-buffer - The G-buffer stores the lighting-relevant data for the final lighting pass. OpenGL places no restriction on what we store in a texture, so it makes sense to store all per-fragment data in one or more screen-sized textures, collectively called the G-buffer, and use them later in the lighting pass. Because the G-buffer textures have the same size as the lighting pass's 2D quad, we get exactly the same fragment data we would have had in a forward rendering setting, but this time in the lighting pass; there is a one-to-one mapping.
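
A G-buffer can be modelled on the CPU as a set of flat, screen-sized arrays that all share one indexing scheme. In the hypothetical sketch below, `GBuffer` and `lighting_pass` are illustrative names, not an OpenGL API; the point is that the lighting-pass invocation for pixel (x, y) reads exactly the texel the geometry pass wrote for that pixel.

```python
class GBuffer:
    """Hypothetical CPU stand-in for G-buffer textures: one flat, screen-sized
    list per attribute, all indexed the same way."""

    def __init__(self, width, height):
        self.width, self.height = width, height
        n = width * height
        self.position = [(0.0, 0.0, 0.0)] * n
        self.normal = [(0.0, 0.0, 1.0)] * n
        self.albedo = [(0.0, 0.0, 0.0)] * n
        self.specular = [0.0] * n

    def index(self, x, y):
        # Pixel (x, y) maps to the same slot in every attribute list.
        return y * self.width + x

    def store(self, x, y, position, normal, albedo, specular):
        i = self.index(x, y)
        self.position[i], self.normal[i] = position, normal
        self.albedo[i], self.specular[i] = albedo, specular

    def fetch(self, x, y):
        i = self.index(x, y)
        return self.position[i], self.normal[i], self.albedo[i], self.specular[i]


def lighting_pass(gbuffer, shade):
    """Full-screen pass: one shade() call per pixel, reading only that pixel's texel."""
    return [
        [shade(*gbuffer.fetch(x, y)) for x in range(gbuffer.width)]
        for y in range(gbuffer.height)
    ]
```

Here `shade` is whatever lighting function the lighting pass applies; it receives position, normal, albedo and specular for its own pixel and nothing else.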

5. Graphics pipeline - A computer graphics pipeline, or rendering pipeline, is a conceptual model that describes the steps a graphics system needs to perform to render a 3D scene to a 2D screen. Once a 3D model has been created, for example in a video game or any other 3D computer animation, the graphics pipeline is the means of turning that model into displayable content. Since the steps required depend on the software and hardware used and on the desired display characteristics, there is no universal graphics pipeline that suits all scenarios. However, graphics application programming interfaces (APIs) such as Direct3D and OpenGL were created to unify similar steps and to control the graphics pipeline of a given hardware accelerator. These APIs abstract the underlying hardware, so programmers do not have to write code that directly manipulates the graphics hardware accelerators (AMD/Intel/NVIDIA etc.).

The graphics pipeline model is usually used in real-time rendering. Often, most of the pipeline steps are implemented in hardware, which allows for special optimizations. The term "pipeline" is used in a similar sense to the pipeline in processors: the individual steps of the pipeline run in parallel, but are blocked until the slowest step has completed.
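
The effect of that blocking can be worked out with a little arithmetic. The sketch below assumes an idealized lock-step pipeline in which every stage advances once per "beat" and a beat lasts as long as the slowest stage; real GPUs buffer work between stages, so this is only a first-order model, and the stage times are made up.

```python
def pipeline_time(stage_times, items):
    """Idealized lock-step pipeline.

    Every stage advances once per beat, and a beat lasts as long as the slowest
    stage. The first item needs one beat per stage; after that, one more item
    finishes every beat.
    """
    beat = max(stage_times)
    return (len(stage_times) + items - 1) * beat


def sequential_time(stage_times, items):
    # No overlap: each item passes through every stage before the next one starts.
    return sum(stage_times) * items


if __name__ == "__main__":
    stages = [1, 3, 2]  # hypothetical per-stage times, arbitrary units
    print(pipeline_time(stages, 100), sequential_time(stages, 100))  # 306 600
```

In this model the slowest stage sets the pace: making the two faster stages even faster would not change the pipelined total at all.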

Implementing Deferred Rendering

The implementation of deferred rendering consists primarily of two passes (stages): the geometry pass and the lighting pass. Each pass uses programmable pipeline functionality through vertex and/or fragment shaders. Furthermore, the two passes communicate with each other through a shared memory area in the video memory of the graphics card.

For each fragment, the G-buffer stores a position vector, a normal vector, a color vector and a specular intensity value. In the geometry pass, all objects in the scene are rendered and these data components are written to the G-buffer. This is done with Multiple Render Targets (MRT), a feature of graphics processing units (GPUs) that lets the programmable rendering pipeline render to several render target textures (color buffers) in a single render pass. MRT thus spreads the information required for the lighting calculations over several render targets, which are later used to calculate the final lit image. Only the geometry stage makes use of 3D geometric data: its input is the meshes of the scene to be rendered, and its output is the G-buffer, filled with the information required to shade every pixel that contributes to the final image.
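
The geometry pass with MRT can be modelled as a fragment shader that returns several outputs at once, each routed to its own render target. In this hypothetical sketch the location constants mirror GLSL `layout(location = N) out` declarations, and the specular intensity is packed into the alpha channel of the albedo target, a common layout rather than one the article prescribes.

```python
# Hypothetical attachment locations, mirroring GLSL `layout(location = N) out`.
POSITION, NORMAL, ALBEDO_SPEC = 0, 1, 2


def geometry_fragment_shader(frag):
    """Return one value per render target; no lighting happens here."""
    r, g, b = frag["albedo"]
    return {
        POSITION: frag["position"],
        NORMAL: frag["normal"],
        ALBEDO_SPEC: (r, g, b, frag["specular"]),  # specular packed into alpha
    }


def geometry_pass(fragments, width, height):
    n = width * height
    targets = {POSITION: [None] * n, NORMAL: [None] * n, ALBEDO_SPEC: [None] * n}
    depth = [float("inf")] * n
    for frag in fragments:
        x, y = frag["pixel"]
        i = y * width + x
        if frag["depth"] >= depth[i]:  # depth test: keep the closest surface
            continue
        depth[i] = frag["depth"]
        # MRT: every output of this single shader run lands in its own target.
        for location, value in geometry_fragment_shader(frag).items():
            targets[location][i] = value
    return targets, depth
```

After the pass, the three target lists plus the depth list form the G-buffer that the lighting pass reads.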

In the geometry pass, a framebuffer object is first initialized; this framebuffer, the G-buffer, has multiple color buffers attached to it. The scene is then rendered into the G-buffer, which requires setting the G-buffer as the current render target. Rendering proceeds in two steps. First, raw geometry information (only vertices, without normals, texture coordinates or materials) is sent to the graphics card using the fixed-function pipeline, which only updates the G-buffer's depth buffer. Next, the material and geometric information of the scene is sent to the graphics card, and a fragment shader fills in the rest of the G-buffer's data.
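
The benefit of filling the depth buffer first can be seen in a small simulation. This hypothetical sketch assumes the second step uses an EQUAL depth comparison against the pre-pass depth (on a GPU, that relies on both passes producing bit-identical depth values, for example via GLSL's `invariant` qualifier), so the expensive G-buffer shader runs once per pixel regardless of draw order, apart from exact depth ties.

```python
def pixel_index(frag, width):
    x, y = frag["pixel"]
    return y * width + x


def depth_prepass(fragments, width, height):
    """Step 1: cheap, depth-only; no colour targets are written."""
    depth = [float("inf")] * (width * height)
    for frag in fragments:
        i = pixel_index(frag, width)
        depth[i] = min(depth[i], frag["depth"])
    return depth


def fill_pass(fragments, width, depth, expensive_shader):
    """Step 2: run the G-buffer shader only where the depth matches exactly."""
    runs = 0
    gbuffer = {}
    for frag in fragments:
        i = pixel_index(frag, width)
        if frag["depth"] != depth[i]:  # EQUAL depth test fails: skip shading
            continue
        runs += 1
        gbuffer[i] = expensive_shader(frag)
    return gbuffer, runs
```

With three layers drawn back to front over a 2x2 area, the fill shader runs 4 times instead of the 12 times a plain less-than depth test would allow.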

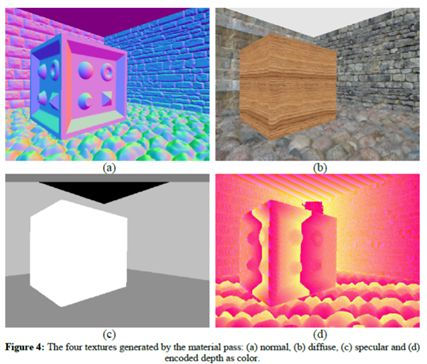

Completing the geometry pass results in four texture maps (the G-buffer contents), namely the normal, diffuse, specular and depth maps. These form the input of the next stage, the lighting pass.
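
A depth map can stand in for a stored position vector, because the view-space position can be reconstructed from depth in the lighting pass. The sketch below assumes an OpenGL-style symmetric perspective projection (camera looking down -z, default depth range [0, 1]) and takes the pixel's normalized device coordinates as input; `project` is included only to check the round trip.

```python
import math


def project(point, fovy_deg, aspect, near, far):
    """View-space point (z < 0) -> (x_ndc, y_ndc, depth in [0, 1]); used to check the inverse."""
    x, y, z = point
    t = 1.0 / math.tan(math.radians(fovy_deg) / 2.0)
    x_ndc = (t / aspect) * x / -z
    y_ndc = t * y / -z
    z_ndc = (far + near) / (far - near) + 2.0 * far * near / ((far - near) * z)
    return x_ndc, y_ndc, 0.5 * z_ndc + 0.5


def reconstruct_position(x_ndc, y_ndc, depth, fovy_deg, aspect, near, far):
    """Recover the view-space position from a depth-map sample."""
    t = 1.0 / math.tan(math.radians(fovy_deg) / 2.0)
    z_ndc = 2.0 * depth - 1.0
    # Distance along the view axis (equal to -z in view space).
    dist = 2.0 * far * near / ((far + near) - z_ndc * (far - near))
    return x_ndc * dist * aspect / t, y_ndc * dist / t, -dist
```

Projecting a point such as (1, -0.5, -7) and feeding the result back into `reconstruct_position` returns the original coordinates up to floating-point error.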

The first step of the lighting pass, the ambient pass, initializes every pixel in the buffer. This is followed by an optimization: when rendering the lighting pass, the scissor rectangle is a good optimization to use. When the scissor test is enabled, every fragment that falls outside a given rectangular portion of the screen is discarded before the fragment shader is executed, saving a lot of processing. The scissor rectangle for each light source is computed on the CPU before that light is rendered. The next step, the illumination pass, accumulates the contributions of all lights. For this, a screen-aligned quadrilateral matching the current render target resolution is rendered for each light, and during rendering the information stored in the G-buffer is used to shade the quadrilateral.
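
Both the scissor rectangle and the per-light accumulation can be sketched on the CPU. The Python below is a simplified model under these assumptions: point lights with a hypothetical linear falloff to zero at `radius`, a symmetric OpenGL-style perspective camera, a light sphere lying entirely in front of the camera, and a G-buffer given as per-pixel lists of view-space positions and normals. The rectangle comes from projecting the eight corners of the box around the light's bounding sphere, which is conservative but not tight.

```python
import math


def to_window(point, fovy_deg, aspect, width, height):
    """Project a view-space point (z < 0) to window coordinates in pixels."""
    x, y, z = point
    t = 1.0 / math.tan(math.radians(fovy_deg) / 2.0)
    x_ndc = (t / aspect) * x / -z
    y_ndc = t * y / -z
    return (x_ndc * 0.5 + 0.5) * width, (y_ndc * 0.5 + 0.5) * height


def scissor_rect(center, radius, fovy_deg, aspect, width, height):
    """Conservative (x0, y0, x1, y1) pixel rectangle for a light, or None.

    Assumes the whole bounding sphere is in front of the camera.
    """
    xs, ys = [], []
    for dx in (-radius, radius):
        for dy in (-radius, radius):
            for dz in (-radius, radius):
                corner = (center[0] + dx, center[1] + dy, center[2] + dz)
                wx, wy = to_window(corner, fovy_deg, aspect, width, height)
                xs.append(wx)
                ys.append(wy)
    x0, y0 = max(0, math.floor(min(xs))), max(0, math.floor(min(ys)))
    x1, y1 = min(width, math.ceil(max(xs))), min(height, math.ceil(max(ys)))
    if x0 >= x1 or y0 >= y1:
        return None  # light is entirely off screen
    return x0, y0, x1, y1


def lighting_pass(positions, normals, width, height, lights, fovy_deg, aspect, ambient=0.0):
    # Ambient pass: initialise every pixel.
    image = [ambient] * (width * height)
    shaded = 0
    for center, radius, intensity in lights:
        rect = scissor_rect(center, radius, fovy_deg, aspect, width, height)
        if rect is None:
            continue
        x0, y0, x1, y1 = rect
        # Illumination pass for this light, restricted to its scissor rectangle.
        for y in range(y0, y1):
            for x in range(x0, x1):
                i = y * width + x
                shaded += 1
                lx = center[0] - positions[i][0]
                ly = center[1] - positions[i][1]
                lz = center[2] - positions[i][2]
                d = math.sqrt(lx * lx + ly * ly + lz * lz)
                if d == 0.0 or d >= radius:
                    continue
                nx, ny, nz = normals[i]
                n_dot_l = max(0.0, (nx * lx + ny * ly + nz * lz) / d)
                image[i] += intensity * n_dot_l * (1.0 - d / radius)  # additive accumulation
    return image, shaded
```

For a small light near a wall that fills a 64x64 view, the scissor rectangle limits shading to a 20x20 block, 400 fragments instead of 4,096.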

Deferred shading does not require knowing which geometry is illuminated by which light, so all lights can be processed in one go. Alternatively, the contribution of each light can be added in a separate pass, one pass per light source. Using one pass per light allows shadows, but it is less efficient than processing multiple lights in a single pass. However, when the scene is small and there are not many lights, deferred rendering is not necessarily faster, and it can even be slower, because its overhead outweighs its benefits. For complex scenes, deferred rendering quickly pays off, all the more so when combined with more advanced optimization extensions.
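
Because light contributions simply add up, the two strategies produce the same image; what differs is how often the G-buffer is read and whether per-light state (such as a shadow map) can be set up between passes. The sketch below assumes a G-buffer reduced to one normal per pixel and directional lights given as (unit direction towards the light, intensity) pairs; `diffuse`, `single_pass` and `pass_per_light` are hypothetical names.

```python
def diffuse(normal, light_dir, intensity):
    # Lambertian term for a directional light.
    n_dot_l = sum(n * l for n, l in zip(normal, light_dir))
    return intensity * max(0.0, n_dot_l)


def single_pass(gbuffer, lights):
    """All lights in one shader invocation per pixel: one G-buffer read per pixel."""
    reads = 0
    out = []
    for normal in gbuffer:
        reads += 1
        out.append(sum(diffuse(normal, d, i) for d, i in lights))
    return out, reads


def pass_per_light(gbuffer, lights):
    """One full-screen pass per light, combined with additive blending
    (dst = dst + src). Each pass re-reads the G-buffer, but the application
    could bind that light's shadow map before each pass."""
    out = [0.0] * len(gbuffer)
    reads = 0
    for d, i in lights:
        for p, normal in enumerate(gbuffer):
            reads += 1
            out[p] += diffuse(normal, d, i)
    return out, reads
```

With three pixels and three lights, both functions return the same values, but the per-light variant performs 9 G-buffer reads instead of 3, which is the efficiency cost described above.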

Where do we see Deferred Rendering?

Despite all these advantages and its smooth rendering process, deferred rendering is not a term many people outside the computer graphics world have heard of. But they have seen it in action. For example, when it is raining in a game, water stands in some areas and splashes when a character runs through it; scenes like this, lit by many light sources, are typical territory for deferred rendering. Deferred rendering has become a popular rendering choice and can be seen in many of today's games. Its use in video games has grown because it provides good control over large numbers of dynamic lights while reducing the complexity of the required shader instructions. Major video game companies such as Rockstar Games and Electronic Arts implement this technique. Some examples of popular games using deferred lighting are:

1. Grand Theft Auto V developed by Rockstar North and published by Rockstar Games

2. Assassin's Creed III by Ubisoft

3. Dead Space 3 developed by Visceral Games and published by Electronic Arts
